High Performance Computing

2021-04-23

1 Basics

1.1 Performance

1.1.1 Scalability

In summary,

- Strong scaling concerns the speedup for a fixed problem size with respect to the number of processors, and is governed by Amdahl’s law.

- Weak scaling concerns the speedup for a scaled problem size with respect to the number of processors, and is governed by Gustafson’s law.

Reference: Scalability: strong and weak scaling
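
The two laws can be compared numerically. The following is a minimal sketch, assuming the standard textbook forms of both laws; the function names `amdahl_speedup` and `gustafson_speedup`, and the parallel fraction `p = 0.95`, are illustrative choices and do not come from the reference.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Strong scaling: fixed problem size.

    p is the fraction of the runtime that can be parallelized,
    n is the number of processors.
    """
    return 1.0 / ((1.0 - p) + p / n)


def gustafson_speedup(p: float, n: int) -> float:
    """Weak scaling: the problem size grows with the processor count.

    p is the parallel fraction of the runtime measured on the
    n-processor system.
    """
    return (1.0 - p) + p * n


if __name__ == "__main__":
    p = 0.95  # assumed parallel fraction, for illustration only
    for n in (1, 8, 64, 512):
        print(n, round(amdahl_speedup(p, n), 2), round(gustafson_speedup(p, n), 2))
```

Under Amdahl's law the speedup can never exceed 1 / (1 - p), however many processors are added, whereas the Gustafson speedup keeps growing linearly in n because the serial part becomes a smaller share of the scaled problem.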

2 Network

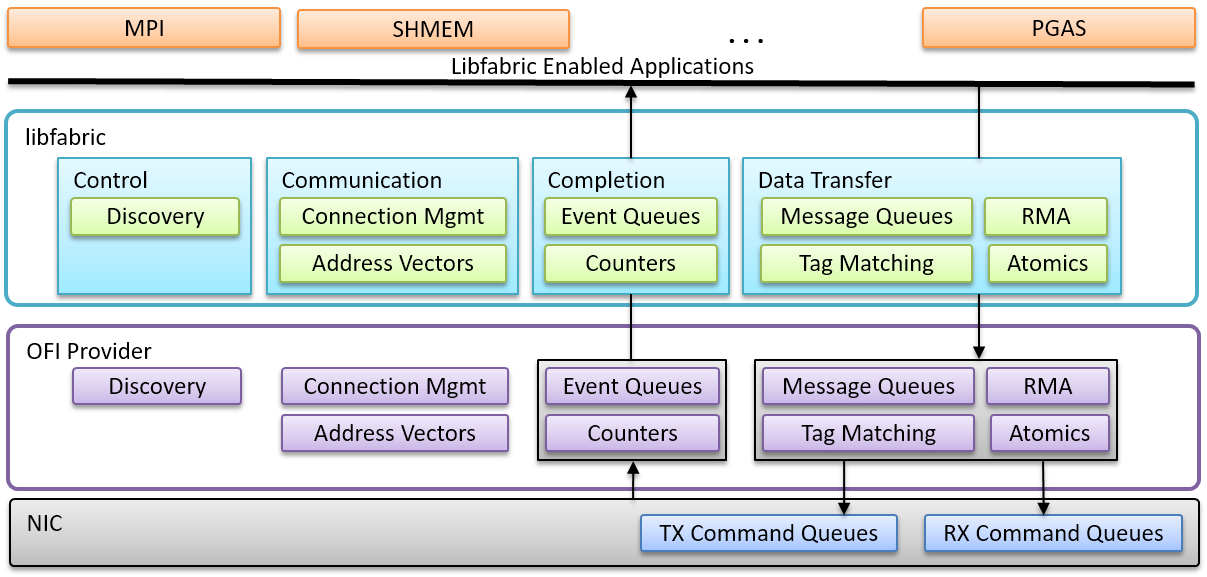

In HPC, the network stack is typically layered as MPI → libfabric → NIC.

OpenFabrics Interfaces (OFI) is a framework focused on exporting fabric communication services to applications. Libfabric is a core component of OFI.

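OpenFabrics Enterprise Distribution (OFED) is the OpenFabrics Alliance's packaged distribution of the RDMA software stack, consisting of kernel modules and user-space libraries.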

Mellanox OFED (MOFED) is Mellanox’s implementation of the OFED libraries and kernel modules.

InfiniBand refers to two distinct things. The first is a physical link-layer protocol for InfiniBand networks. The second is a higher level programming API called the InfiniBand Verbs API. The InfiniBand Verbs API is an implementation of a remote direct memory access (RDMA) technology.

Reference: CHAPTER 13. CONFIGURE INFINIBAND AND RDMA NETWORKS

Libfabric supports a variety of high-performance fabrics and networking hardware. It runs over standard TCP and UDP networks as well as high-performance fabrics such as Omni-Path Architecture, InfiniBand, Cray GNI, Blue Gene Architecture, iWARP RDMA Ethernet, and RDMA over Converged Ethernet (RoCE).

IB achieves high performance because, unlike TCP/IP, the kernel is not involved in operations that transmit or receive data (hence the term user-level networking). The kernel is involved only in creating the resources used to issue those operations. Additionally, unlike TCP/IP, the InfiniBand interface permits RDMA operations (remote reads, writes, atomics, etc.).

libibverbs is the software component (Verbs API) of the IB interface. As sockets is to TCP/IP, libibverbs is to IB.

The hardware component of IB is where different vendors come into play. The IB interface is abstract; hence, multiple vendors can have different implementations of the IB specification.

Mellanox Technologies has been an active, prominent InfiniBand hardware vendor. In addition to meeting the IB hardware specifications in the NIC design, the vendors have to support the libibverbs API by providing a user-space driver and a kernel-space driver that actually do the work (of setting up resources on the NIC) when a libibverbs function such as ibv_open_device is called. These vendor-specific libraries and kernel modules are a standard part of the OFED. The vendor-specific user-space libraries are called providers in rdma-core. Mellanox OFED (MOFED) is Mellanox’s implementation of the OFED libraries and kernel modules. MOFED contains certain optimizations that are targeted towards Mellanox hardware (the mlx4 and mlx5 providers) but haven’t been incorporated into OFED yet.

Alongside InfiniBand, several other user-level networking interfaces exist. Typically they are proprietary and vendor-specific: Cray has the uGNI interface, Intel Omni-Path has PSM2, Cisco has usNIC, etc. The underlying concepts (message queues, completion queues, registered memory, etc.) are similar across the different interfaces, with certain differences in capabilities and semantics. OpenFabrics Interfaces (OFI) intends to unify all of the available interfaces by providing an abstract API: libfabric. Each vendor then supports OFI through its libfabric provider, which calls the corresponding functions in the vendor's own interface. libfabric is a fairly recent API and intends to serve a level of abstraction higher than that of libibverbs.

Reference: For the RDMA novice: libfabric, libibverbs, InfiniBand, OFED, MOFED?

Fabric control in Intel MPI reports that Intel MPI 2019 has issues with AMD processors. This is confirmed by Intel.

/etc/security/limits.conf and /etc/security/limits.d/20-nproc.conf set the limit on the number of processes.
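
To see how such a limit is expressed and what the running process actually gets, here is a minimal sketch for Linux. It assumes the four-column `<domain> <type> <item> <value>` line format used by these files; the `SAMPLE` text is illustrative only and is not copied from a real system, and the helper names `parse_nproc_limits` and `current_nproc_limit` are hypothetical.

```python
import resource  # Unix-only standard library module

# Illustrative content only, not copied from a real system.
SAMPLE = """\
# <domain>  <type>  <item>  <value>
*          soft    nproc     4096
root       soft    nproc     unlimited
"""


def parse_nproc_limits(text):
    """Return (domain, type, value) tuples for every nproc entry."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blanks
        if not line:
            continue
        fields = line.split()
        if len(fields) == 4 and fields[2] == "nproc":
            domain, limit_type, _item, value = fields
            entries.append((domain, limit_type, value))
    return entries


def current_nproc_limit():
    """Return the (soft, hard) RLIMIT_NPROC pair of this process."""
    return resource.getrlimit(resource.RLIMIT_NPROC)


if __name__ == "__main__":
    print(parse_nproc_limits(SAMPLE))
    print(current_nproc_limit())
```

A value of `resource.RLIM_INFINITY` in the returned pair means the limit is unlimited. Comparing the parsed entries with the live limit is one way to check whether a change to the files has taken effect in a new login session.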

Set up Message Passing Interface for HPC gives some commands for running MPI.

Unified Communication X (UCX) is a framework of communication APIs for HPC. It is optimized for MPI communication over InfiniBand and works with many MPI implementations such as OpenMPI and MPICH.

For now, I still do not fully understand what UCX is. Check the UCX FAQ.

Some relevant issues on the Intel forum:

- MPI_IRECV sporadically hangs for Intel 2019/2020 but not Intel 2018

- Intel MPI update 7 on Mellanox IB causes mpi processes to hang

- MPI myrank debugging

Other Intel articles:

- Improve Performance and Stability with Intel® MPI Library on InfiniBand

Check OpenMPI FAQ